MoZ Monitor

For my Bachelor's project, I developed the MoZ Monitor, a hybrid tool combining offline and digital elements to improve mentor-mentee engagement and streamline the evaluation process. This solution bridges the gap between technology and human interaction, driving meaningful feedback and real-time insights.


Product Designer & Developer

Solo

Feb - June 2024

Web, Mobile (PWA)

Overview

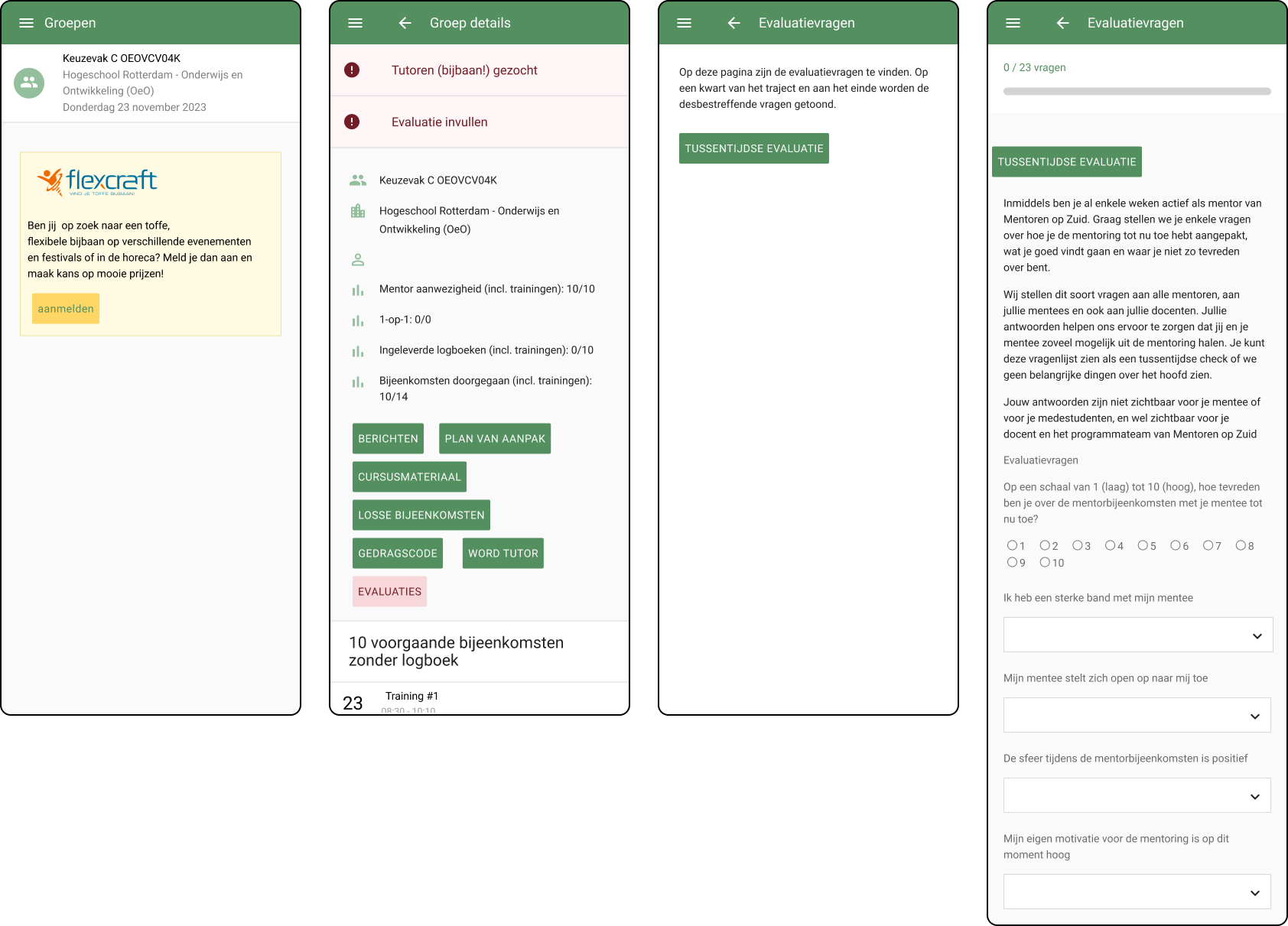

During my internship at Mentoren op Zuid (MoZ), I reimagined the evaluation process to address low engagement from mentors (38.5%) and mentees (5.8%). The existing system, an outdated web app, failed to gather actionable feedback for program improvement.

To address this, I developed MoZ Monitor, a hybrid solution combining offline and digital tools to improve engagement and usability for all stakeholders. This proof of concept was presented to MoZ but not implemented, leaving room for future customization.

This project allowed me to explore innovative ways to bridge analog and digital tools, create a user-centered evaluation system, and ensure meaningful engagement for mentors, mentees, and MoZ coordinators. It also scored a 10/10 on my final assessment!

The Problem

The evaluation system at MoZ struggled with low engagement and poor usability, leading to incomplete feedback and missed opportunities for improvement. The outdated MoZ web app was difficult for mentors to navigate and inaccessible for younger mentees, making evaluations feel disconnected from the mentoring experience.

Key Problems:

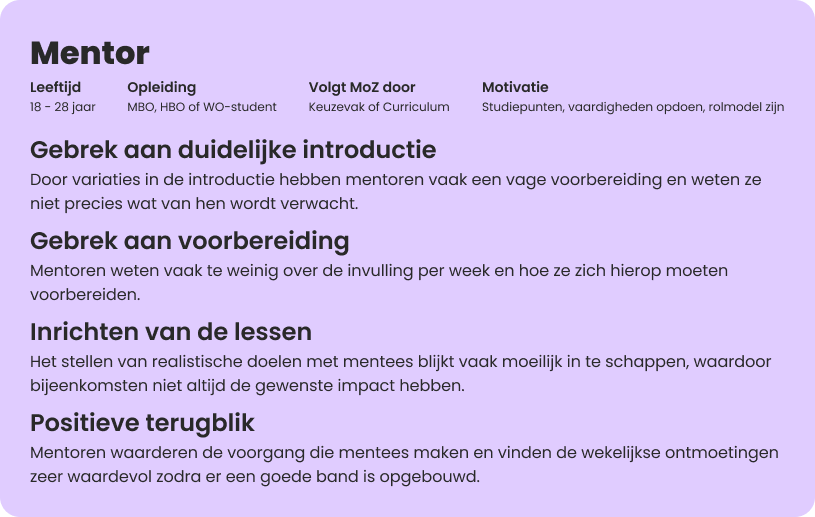

Mentors

Felt unprepared for their roles, were frustrated with the outdated app, and felt that evaluations didn't add value to their mentoring experience.

Mentees

The process was not designed with their age group in mind, resulting in low usage of the app and incomplete evaluations.

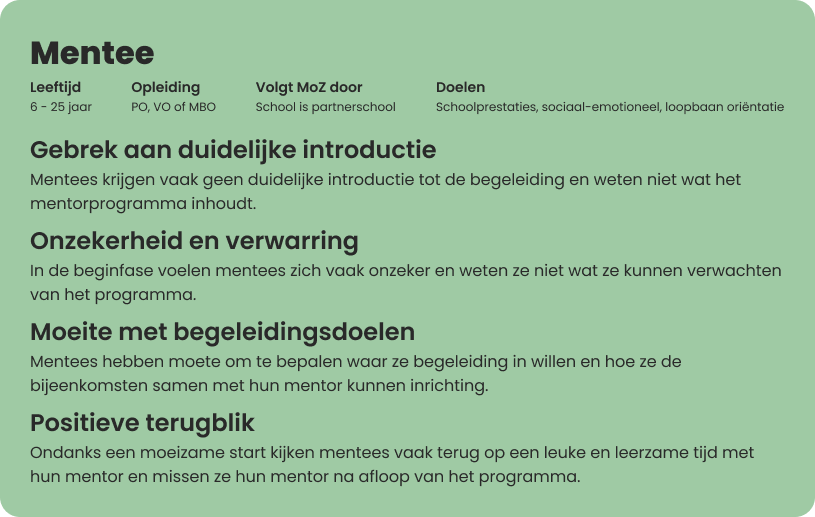

MoZ Coordinators

Lacked usable data to track progress and improve the program, leading to missed opportunities for growth.

Without a user-friendly evaluation system, MoZ could not gather meaningful feedback or track program progress effectively. As a result, it was unable to make data-driven decisions to improve the program and enhance the mentor-mentee experience.

The Solution

The MoZ Monitor was designed and developed to tackle these issues by integrating offline and digital tools. The hybrid system consisted of:

- Offline Evaluation Game: Designed to foster collaboration and engagement between mentors and mentees during sessions, this game-like tool features a logbook and question cards that make evaluations more interactive.

- MentorenApp: A Progressive Web App (PWA) that digitizes handwritten evaluations via image recognition and OCR technology, making the process easier and more efficient.

- MoZ Dashboard: A web-based platform providing MoZ coordinators with real-time insights and customizable reports to track progress and make data-driven decisions.

This solution integrates insights from extensive user research, co-creation sessions, and iterative design processes to create a system that bridges the gap between technology and human interaction.

User Research

To design a system that addressed the challenges MoZ faced, I employed a multi-method research approach:

- Interviews

  - I spoke with mentors, mentees, MoZ coordinators, and MoZ teachers to understand their experiences, frustrations, and aspirations.

- Field Observations

  - By observing mentoring sessions firsthand, I gained insights into real-world interactions and the dynamics of the mentor-mentee relationship.

- Desk Research

  - I reviewed existing research within MoZ and the broader field of mentoring programs, as well as UX best practices for diverse user groups.

Key Insights

These insights helped shape the MoZ Monitor solution, ensuring it met the needs of all stakeholders.

Mentors

Felt unprepared for their roles, were frustrated with the outdated app, and lacked clarity on many aspects of the program.

Mentees

Didn't know what to expect from the program, had difficulty setting goals, and, especially among younger mentees, lacked access to the current app due to language and tech barriers.

MoZ Coordinators

Lacked an overview of the data generated by the current web app, struggled to identify areas for improvement, and were frustrated with the web app's limitations.

Challenges with the Current Evaluation Process

The current MoZ web app, in use since 2015, no longer met the needs of the program. Key challenges included:

- Outdated Design: Clunky interface that frustrated mentors and coordinators.

- Limited Accessibility for Mentees: The app didn’t account for the age, language, and tech skills of mentees, leading to almost no usage by mentees.

- Unengaging Forms: Long, complex evaluation forms that failed to capture the attention of users.

Ideation

The ideation phase involved co-creation sessions with multiple stakeholders to explore how evaluations could be seamlessly integrated into the mentoring process. The goal of these sessions was to generate ideas that would engage mentors and mentees, improve usability, and provide actionable insights for coordinators.

We brainstormed a range of concepts, from fully digital solutions to more collaborative offline activities, considering the needs of mentors, mentees, and coordinators.

Brainstorm Methods

The following methods were used to generate ideas:

- Post-it Wall: Used to visually organize thoughts and ideas.

- Brainwriting (6-3-5 Method): A technique where each participant wrote down three ideas in five minutes, then passed them to another participant who built upon or added to the ideas. This method encouraged idea development in silence and stimulated new thinking.

The MoZ Monitor Concept

From these concepts, I developed the MoZ Monitor concept, a hybrid solution combining offline and digital tools. The final product consisted of three key components:

- Offline Evaluation Game

  - A game-like activity tailored for mentoring sessions.

  - Easy-to-answer formats to engage mentees and make evaluations fun and age-appropriate.

- MentorenApp

  - A Progressive Web App (PWA) equipped with OCR technology to digitize handwritten evaluations.

  - Evaluations would sync to the MoZ Monitor dashboard for real-time analysis.

- MoZ Dashboard

  - Coordinators could view evaluation progress, generate customized reports, and identify program improvement areas.

First sketch of the product.

Guidelines & Scope

Design Guidelines

To create a solution that truly met the needs of mentors, mentees, and coordinators, I synthesized insights from my research into actionable design guidelines:

- Intuitive tools for mentors.

- Collaborative, age-appropriate activities for mentees.

- Real-time data access and customizable reporting for coordinators.

Product Scope and Journey

Building on these guidelines, I defined the product scope and mapped out the system’s journey. The product scope focused on integrating offline evaluations with digital data, creating a seamless experience for all users. The Minimum Viable Product (MVP) was designed to prioritize three core functionalities:

- Simplified offline evaluations.

- OCR-powered digitization via the MentorenApp.

- Real-time data visualization through the MoZ dashboard.

Design Process

The design process was iterative, with continuous prototyping and user feedback guiding improvements to both the MentorenApp and MoZ Dashboard. Through user testing with mentors and coordinators, I refined each version to improve usability, engagement, and data insights, ensuring the solution addressed key challenges in the evaluation process.

I created high-fidelity prototypes for both products, which were turned into clickable wireframes. These were tested with end-users, and feedback informed subsequent design iterations.

MentorenApp

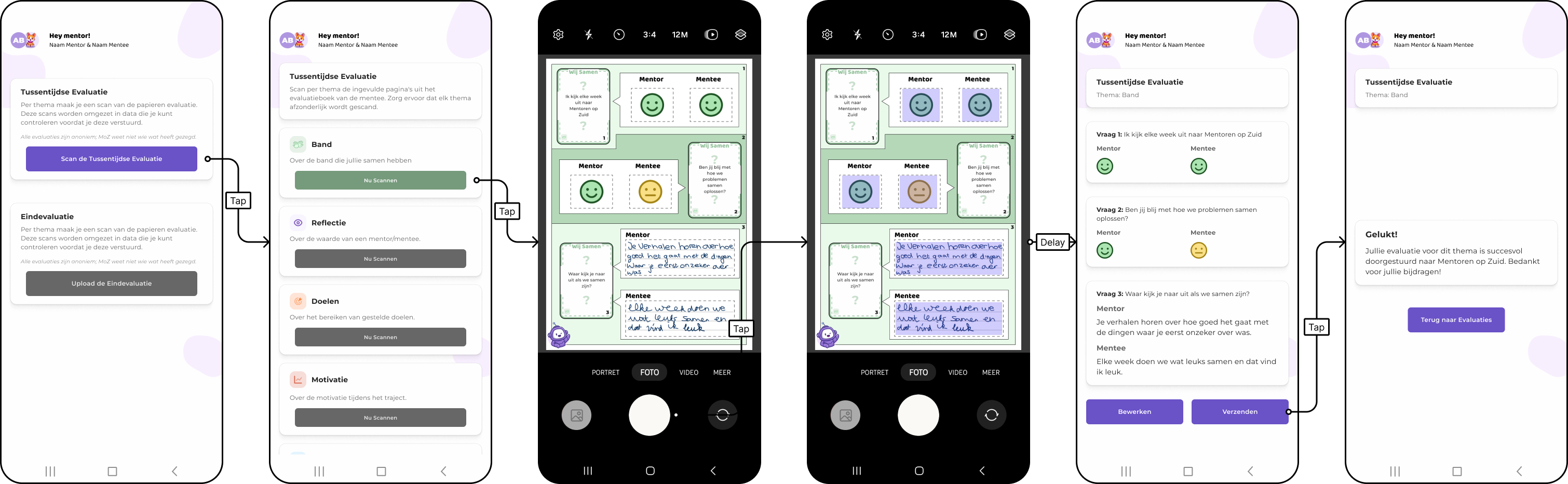

The MentorenApp was designed to digitize evaluations, improve usability, and make the evaluation process more engaging for mentors. Each iteration focused on enhancing functionality, usability, and alignment with mentor expectations.

First Iteration

The first version focused on simulating the digitization process of evaluations through a clickable prototype in Figma. This allowed me to mimic the evaluation process and gather valuable feedback from mentors.

Onboarding (First Iteration Only)

To ensure that mentors could fully grasp the app’s potential during testing, I designed an onboarding process to guide users through key features, such as linking mentees to their accounts. Although onboarding was not part of the MVP scope for development, it provided valuable context during testing and showcased the app’s broader potential.

Feedback:

Positive

Mentors found the app easy to use and visually clear, and they appreciated the OCR feature for digitizing evaluations.

Areas for Improvement

Mentors felt the app lacked additional features that would make it more useful beyond just digitizing evaluations. They expressed that the app would not motivate regular use without more value-added functionality.

First iteration of the MentorenApp.

Next Steps

To improve engagement, a program overview feature was proposed to give mentors a clearer sense of their tasks. This feature would be included in the next iteration of the MentorenApp.

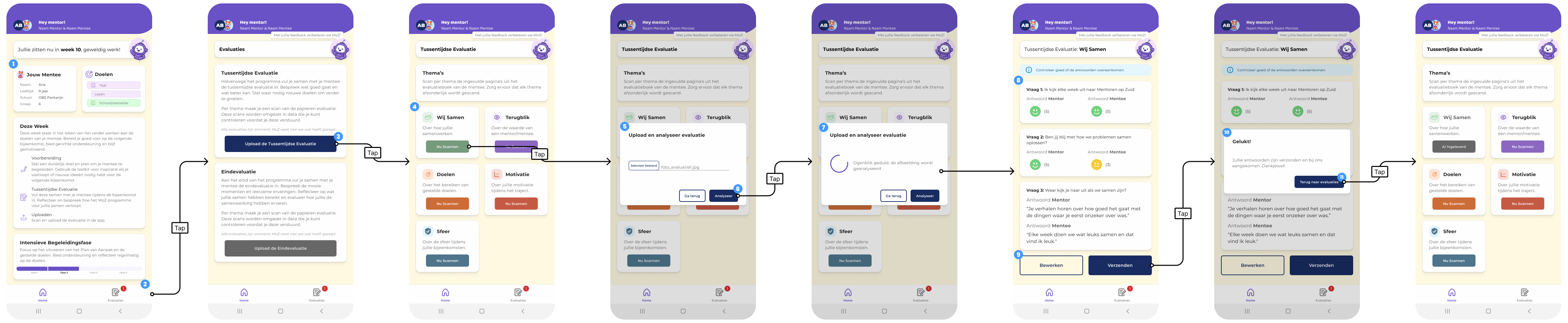

Second Iteration

In response to feedback, the second iteration added a home page with a weekly task overview. Navigation was also streamlined to improve usability. The app also received a visual overhaul to match the new styling MoZ had adopted to align with the University of Applied Sciences Rotterdam (Hogeschool Rotterdam).

Changes:

- New home page with weekly overview.

- Simplified navigation for improved usability.

Feedback:

Positive

Mentors appreciated the new layout and found the app easier to navigate.

Next Steps

Start development of the final product, incorporating the new features and design improvements from the second iteration.

Second iteration of the MentorenApp.

MoZ Dashboard

The MoZ Dashboard was designed to provide coordinators with real-time insights into evaluation data and customizable reports for stakeholders.

First Iteration

The first iteration focused on creating a visual representation of the dashboard, showing the average scores per theme and the response rate of evaluations. This prototype was tested with MoZ coordinators to gather feedback on the design and functionality.
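To make the two dashboard metrics concrete, here is a minimal TypeScript sketch of how an average score per theme and a response rate could be computed. It is not the project's actual code: the `EvaluationAnswer` shape, the theme names, the numeric score scale, and both function names are assumptions made for illustration.

```typescript
// Hypothetical shape of one digitized evaluation answer (not from the MoZ codebase).
interface EvaluationAnswer {
  theme: string; // illustrative theme name, e.g. "Goals"
  score: number; // assumed numeric scale, e.g. 1 to 5
}

// Average score per theme, rounded to one decimal place.
function averageScorePerTheme(answers: EvaluationAnswer[]): Record<string, number> {
  const totals: Record<string, { sum: number; count: number }> = {};
  for (const { theme, score } of answers) {
    const entry = totals[theme] ?? { sum: 0, count: 0 };
    entry.sum += score;
    entry.count += 1;
    totals[theme] = entry;
  }
  const averages: Record<string, number> = {};
  for (const theme of Object.keys(totals)) {
    const { sum, count } = totals[theme];
    averages[theme] = Math.round((sum / count) * 10) / 10;
  }
  return averages;
}

// Response rate as a percentage of expected evaluations, rounded to one decimal place.
function responseRate(submitted: number, expected: number): number {
  if (expected <= 0) return 0; // avoid dividing by zero when nothing is expected
  return Math.round((submitted / expected) * 1000) / 10;
}

// Made-up sample data, only to show how the functions are called.
const sampleAnswers: EvaluationAnswer[] = [
  { theme: "Goals", score: 4 },
  { theme: "Goals", score: 5 },
  { theme: "Collaboration", score: 3 },
];

console.log(averageScorePerTheme(sampleAnswers));
console.log(responseRate(3, 8));
```

Keeping both calculations as pure functions would let a dashboard component recompute them whenever new evaluations arrive, without tying the logic to a specific charting library.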

Feedback from MoZ coordinators who tested the first iteration of the MoZ Dashboard included:

Positive

Coordinators found the dashboard intuitive and easy to navigate.

Areas for Improvement

They requested options for customizing reports and more detailed insights into evaluation data.

Next Steps

Start development of the final product, expanding the features of the MoZ Dashboard to include more customization options for reports. The 'Rapportages' page would be fully developed in the next iteration, leaving the overview page as a won't-have for the MVP.

Wireframes of the MoZ Dashboard.

Final Product

I developed the MoZ Monitor as a Progressive Web App (PWA) using ReactJS, offering a modern, scalable, and responsive solution to MoZ’s evaluation challenges. This system was designed to integrate offline and digital tools, ensuring usability for mentors, mentees, and coordinators alike.

As part of the offline solution, I designed a logbook and a question card deck for the evaluation game. These were professionally printed to strengthen the proof of concept. The logbook allows mentors and mentees to record evaluations during sessions, while the question cards make the process more engaging and collaborative.

Key Components

The final product consisted of three interconnected tools:

- Offline Evaluation Game: Encouraging collaborative, engaging evaluation sessions between mentors and mentees.

- MentorenApp: Digitizing handwritten evaluations through OCR technology and syncing them to a central database.

- MoZ Dashboard: Providing real-time insights and customizable reporting for coordinators to track progress and analyze data effectively.
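As a rough illustration of the step between OCR and the central database, the sketch below turns raw recognized text into structured answers and sets aside anything it cannot interpret for manual review. Everything here is hypothetical: the `"<question>: <score>"` line format, the 1 to 5 scale, and the names `parseOcrLines`, `ParsedAnswer`, and `ParseResult` are assumptions, and the OCR step itself is out of scope.

```typescript
// Hypothetical record produced after digitizing one logbook line.
interface ParsedAnswer {
  question: number;
  score: number;
}

interface ParseResult {
  answers: ParsedAnswer[];
  needsReview: string[]; // lines the parser could not interpret
}

// Assumes the OCR step yields one line per answer in the form "<question>: <score>"
// with scores on a 1 to 5 scale. Both the format and the scale are illustrative.
function parseOcrLines(rawText: string): ParseResult {
  const result: ParseResult = { answers: [], needsReview: [] };
  for (const line of rawText.split("\n")) {
    const trimmed = line.trim();
    if (trimmed === "") continue; // skip blank lines
    const match = /^(\d+)\s*:\s*(\d+)$/.exec(trimmed);
    const score = match ? Number(match[2]) : NaN;
    if (match && score >= 1 && score <= 5) {
      result.answers.push({ question: Number(match[1]), score });
    } else {
      // Misread characters or out-of-range scores are kept for a human to check.
      result.needsReview.push(trimmed);
    }
  }
  return result;
}

// Made-up OCR output: two clean lines, one misread score, one out-of-range score.
const parsed = parseOcrLines("1: 4\n2 : 5\n3: S\n4: 9\n");
console.log(parsed);
```

Flagging uncertain lines instead of guessing keeps misread handwriting out of the dashboard data, which matters when coordinators rely on it for decisions.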

The MentorenApp.

The MoZ Monitor Dashboard.

Recommendations

To support MoZ in implementing and scaling the MoZ Monitor, I provided the following recommendations:

For Implementation

- Pilot Testing: Conduct pilot implementations at a small selection of schools to test the combined use of offline and digital tools in real-world scenarios.

- Session Integration: Encourage mentors and mentees to use the logbook and evaluation game during mentoring sessions to make evaluations feel natural and engaging.

For Further Development

- Secure Login Systems: Implement SSO (Single Sign-On) for both the MentorenApp and MoZ Dashboard to ensure user data security and seamless access.

- Enhanced OCR Technology: Explore the use of machine learning to improve emoji recognition and ensure accuracy in digitizing handwritten responses.

- Improved Notifications: Introduce in-app reminders for mentors to complete evaluations, increasing response rates.

For Research and Future Features

- Broader Dashboard Access: Evaluate making the MoZ Dashboard accessible to teachers or mentee schools for greater transparency and collaboration.

- Logbook Iterations: Conduct further research into how the logbook can be adapted for different age groups or levels, ensuring inclusivity and effectiveness.

Reflections

During my internship at Mentoren op Zuid (MoZ), I took full ownership of redesigning the evaluation process, balancing offline and online tools. Combining analog tools like the logbook and question cards with the MentorenApp and MoZ Dashboard resulted in a more flexible, inclusive solution. Managing the entire project solo—responsible for research, design, development, and testing—was challenging but helped me develop critical project management, communication, and problem-solving skills.

Key Takeaways

- User-Centered Design is Key

  - Involving users at every stage, from ideation to testing, was critical to designing solutions that genuinely addressed their needs.

- Offline and Online Can Coexist

  - Blending analog and digital tools created a more flexible and inclusive evaluation process, making it accessible to mentees while still generating actionable data for coordinators.

- Managing a Project Solo

  - Taking on the entire project allowed me to develop strong project management skills, balancing multiple tasks, making independent decisions, and effectively communicating design choices to stakeholders, even with limited resources.

Future Vision

If I were to continue, I'd focus on refining the integration of offline and digital tools to enhance the mentor-mentee experience and explore additional gamification features to ensure long-term engagement. The MoZ Monitor holds great potential for transforming mentoring programs through innovative evaluation methods.